Introduction

Most startups don’t fail because the code breaks. They fail because no one wants what the code does. In CB Insights’ widely cited analysis of startup post-mortems, “no market need” was the most frequently cited reason for failure, appearing in roughly 42% of cases. If your Minimum Viable Product (MVP) doesn’t prove value quickly, you aren’t building a product; you’re burning runway.

This is a handbook for founders and early product teams who want to run an MVP as an experiment: brief, inexpensive, quantifiable, and aimed at the single riskiest assumption. Read it, save the checklist, and execute the 4-week plan near the end.

What an MVP Really Is (and Isn't)

An MVP is the minimum product you can deliver to validate a fundamental business hypothesis with actual users. It is not a feature set, a demo, or a prettier beta. An MVP is there to answer the following questions:

- Who will use it?

- What job does it do for them?

- Will they pay, return, or refer?

If your MVP can’t measure at least one of these, you are building a prototype, not running an experiment.

Common Mistakes

Mistake 1: Mixing features with hypotheses

The issue: You create a bare-bones version of the final product and hope people will tell you what’s important.

Why it fails: Features hide causality. You don’t know what part of the product caused the signal you received.

Solutions:

Write a single hypothesis that names who, need, and metric (e.g., small business owners who need X will pay $X/month, and landing-page conversion will be > 5%).

Create only the flow that generates that metric.

Use fake doors and explainer pages to test interest before you write a single line of code.
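
A hypothesis with a numeric threshold can be checked mechanically once the fake door or landing page has collected traffic. The following is a minimal sketch, not a prescribed method: it assumes the metric is a simple conversion rate, uses a Wilson score interval (a standard confidence interval for proportions) to avoid over-reading small samples, and the function names and the three verdict labels are illustrative.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z=1.96 is roughly 95% confidence)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return center - half, center + half


def evaluate_hypothesis(conversions: int, visitors: int, threshold: float) -> str:
    """Compare the whole interval, not just the point estimate, to the threshold."""
    low, high = wilson_interval(conversions, visitors)
    if low > threshold:
        return "supported"      # even the pessimistic estimate beats the target
    if high < threshold:
        return "refuted"        # even the optimistic estimate misses the target
    return "inconclusive"       # collect more traffic before deciding


# Example: 40 sign-ups from 400 visitors against the "> 5%" target
print(evaluate_hypothesis(conversions=40, visitors=400, threshold=0.05))  # prints "supported"
```

An "inconclusive" verdict is useful in itself: it signals that the test needs more visitors, not more features.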

Mistake 2: Omitting structured validation

The issue: Founders rely on their gut feeling and omit interviews and cheap tests, thinking they aren’t a good use of their time.

Why it fails: You’ll likely optimize for the wrong customer or the wrong problem.

How to validate quickly:

Interview 12–15 target users with behavior-driven questions.

Ship a one-page landing page with two different value propositions and track sign-ups, click-through rates, and cost-per-lead.

Provide a pre-order, pilot, or waitlist to measure commitment.
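
The two-value-proposition landing page test can be summarized with a few lines of arithmetic. This is a rough sketch under stated assumptions: the `Variant` fields, the sample numbers, and the use of a pooled two-proportion z-test are illustrative choices, not something the tests above require.

```python
import math
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    visitors: int
    signups: int
    ad_spend: float  # assumed: spend attributed to this variant

    @property
    def signup_rate(self) -> float:
        return self.signups / self.visitors

    @property
    def cost_per_lead(self) -> float:
        return self.ad_spend / self.signups if self.signups else math.inf


def two_proportion_p_value(a: Variant, b: Variant) -> float:
    """Two-sided p-value for the difference in sign-up rates (pooled z-test)."""
    pooled = (a.signups + b.signups) / (a.visitors + b.visitors)
    se = math.sqrt(pooled * (1 - pooled) * (1 / a.visitors + 1 / b.visitors))
    if se == 0:
        return 1.0
    z = (a.signup_rate - b.signup_rate) / se
    # For a standard normal, P(|Z| > z) equals erfc(z / sqrt(2))
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical results for two value propositions
save_time = Variant("save time", visitors=500, signups=40, ad_spend=250.0)
save_money = Variant("save money", visitors=500, signups=20, ad_spend=250.0)

print(save_time.cost_per_lead)   # prints 6.25
print(save_money.cost_per_lead)  # prints 12.5
print(two_proportion_p_value(save_time, save_money) < 0.05)  # prints True
```

With only a few hundred visitors per variant, small differences will rarely be significant, so treat a high p-value as "keep testing" and not as proof that the propositions are equivalent.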

Data point: Use public industry post-mortems when you need benchmarks. CB Insights’ analysis of startup post-mortems ranks absence of market demand among the most frequent causes of failure.

Mistake 3: Overengineering the stack

The issue: You spend months deciding on microservices, infrastructure, or an innovative architecture.

Why it fails: This increases your time-to-learn and expenses.

What to do:

Start with the simplest stack that records your metric (no-code, server-rendered, or a single-page app).

Isolate your business logic so you can re-platform down the road.

Document data models and edge cases, even for prototypes.
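
One way to keep business logic isolated, sketched here with hypothetical names (`Signup`, `SignupStore`, `register`, and the plan names are all assumptions for illustration), is to put the rules in plain functions that depend only on a small storage interface. The web framework, no-code backend, or database can then be swapped without touching the rules.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Signup:
    email: str
    plan: str


class SignupStore(Protocol):
    """The only thing the business logic knows about persistence."""

    def save(self, signup: Signup) -> None: ...


class InMemoryStore:
    """Throwaway store for the MVP; replace with a real database later."""

    def __init__(self) -> None:
        self.rows: list[Signup] = []

    def save(self, signup: Signup) -> None:
        self.rows.append(signup)


ALLOWED_PLANS = {"free", "pilot"}  # assumed plan names


def register(email: str, plan: str, store: SignupStore) -> Signup:
    """Business rules live here, independent of framework and storage."""
    email = email.strip().lower()
    if "@" not in email:
        raise ValueError("invalid email")
    if plan not in ALLOWED_PLANS:
        raise ValueError("unknown plan")
    signup = Signup(email=email, plan=plan)
    store.save(signup)
    return signup


store = InMemoryStore()
register("  Ada@Example.com ", "pilot", store)
print(store.rows[0].email)  # prints "ada@example.com"
```

Re-platforming then means writing a new class with a `save` method; `register` and its edge cases stay as they are.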

Mistake 4: Shipping without measurement

The issue: Launching without instrumentation turns every interpretation of user feedback into guesswork.

Key metrics to instrument from day one:

Activation funnel: landing page → sign-up → first key action.

Week 1 retention and cohort behavior.

Revenue conversion or willingness-to-pay signals.

Actionable step: Collect both passive and active feedback by pairing analytics events with one-question exit surveys at the points where users drop off.
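
The activation funnel above can be computed from a flat list of analytics events. This is a simplified sketch: the event names are placeholders for whatever your analytics tool emits, and it ignores timestamps, counting a user at a step only if they also triggered every earlier step.

```python
from collections import defaultdict
from typing import Sequence

# Assumed event names for landing page -> sign-up -> first key action
FUNNEL_STEPS = ("landing_view", "sign_up", "first_key_action")


def funnel_counts(
    events: Sequence[tuple[str, str]], steps: Sequence[str] = FUNNEL_STEPS
) -> list[int]:
    """Count users reaching each step; events are (user_id, event_name) pairs."""
    seen: dict[str, set[str]] = defaultdict(set)
    for user_id, event_name in events:
        seen[user_id].add(event_name)
    counts = []
    for i in range(len(steps)):
        required = set(steps[: i + 1])
        counts.append(sum(1 for names in seen.values() if required <= names))
    return counts


def step_conversion(counts: Sequence[int]) -> list[float]:
    """Conversion from each step to the next; 0.0 when the earlier step is empty."""
    return [(nxt / prev if prev else 0.0) for prev, nxt in zip(counts, counts[1:])]


events = [
    ("u1", "landing_view"), ("u1", "sign_up"), ("u1", "first_key_action"),
    ("u2", "landing_view"), ("u2", "sign_up"),
    ("u3", "landing_view"),
    ("u4", "landing_view"),
]
counts = funnel_counts(events)
print(counts)                   # prints [4, 2, 1]
print(step_conversion(counts))  # prints [0.5, 0.5]
```

The step with the lowest conversion is the natural place for the one-question exit survey.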

Mistake 5: Chasing vanity metrics

The issue: Founders prioritize downloads or incoming traffic but neglect engagement and retention.

Trade vanity metrics for value metrics:

Vanity metrics: downloads, raw sign-ups, page views.

Value metrics: activation rate, time-to-first-value, Week 1 retention, conversion-to-paid, revenue per active user.

Decision rule: Track metrics that move only when users actually receive the value you promised.
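
Two of the value metrics above, Week 1 retention and time-to-first-value, are easy to compute from sign-up and activity dates. The definitions in this sketch are assumptions, since teams define them differently: here "Week 1 retention" means any activity 7 to 13 days after sign-up, and time-to-first-value is the median number of days from sign-up to the first key action.

```python
from datetime import date, timedelta
from statistics import median
from typing import Optional


def week1_retention(signups: dict[str, date], activity: dict[str, list[date]]) -> float:
    """Share of signed-up users with any activity 7-13 days after sign-up."""
    if not signups:
        return 0.0
    retained = 0
    for user, signed_up in signups.items():
        start = signed_up + timedelta(days=7)
        end = signed_up + timedelta(days=13)
        if any(start <= day <= end for day in activity.get(user, [])):
            retained += 1
    return retained / len(signups)


def median_days_to_first_value(
    signups: dict[str, date], first_value: dict[str, date]
) -> Optional[float]:
    """Median days from sign-up to first key action, among users who reached it."""
    deltas = [(first_value[u] - signups[u]).days for u in signups if u in first_value]
    return median(deltas) if deltas else None


signups = {
    "a": date(2024, 1, 1), "b": date(2024, 1, 1),
    "c": date(2024, 1, 2), "d": date(2024, 1, 3),
}
activity = {
    "a": [date(2024, 1, 2), date(2024, 1, 9)],  # returns in week 1
    "b": [date(2024, 1, 3)],                    # active only in the first days
    "c": [date(2024, 1, 9)],                    # returns in week 1
}
first_value = {"a": date(2024, 1, 1), "b": date(2024, 1, 4), "c": date(2024, 1, 4)}

print(week1_retention(signups, activity))                # prints 0.5
print(median_days_to_first_value(signups, first_value))  # prints 2
```

Whatever definitions you pick, write them down before launch so the Week 4 decision is not made against a moving target.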

Mistake 6: Poor UX in the core flow

The issue: Founders deprioritize usability for the MVP to save time.

Why it fails: Poor user experience introduces noise to tests and masks actual demand.

Minimum usable product rule:

Ship a whole, functional core flow—even if the rest is manual or gated.

Conduct five “guerrilla usability tests” and address the top three blockers before scaling.

Mistake 7: No scaling path

The issue: You treat the MVP as a throwaway with no design for how it will change.

Why it fails: When demand materializes, teams rush to redesign contracts and data models.

What to prepare for:

Keep API and data contracts minimal and explicit.

Use API-first patterns where it makes sense.

Mark technical debt and specify owners and deadlines.

Mistake 8: Aiming for perfection

The issue: Perfectionism hinders learning.

Solution: Ship the minimal, testable thing that confirms or refutes your hypothesis. Real product-market fit comes from iteration, not polish.

Mistake 9: Leaving out the go-to-market while building

The issue: Product teams expect users to discover them naturally.

Basic go-to-market fundamentals for an MVP:

Create a pre-launch list and provide value to it.

Run small paid tests to validate your CAC vs. LTV hypotheses.

Seed early accounts and collect testimonials or case notes you can show new leads.
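
The CAC vs. LTV check from a small paid test comes down to two formulas. This sketch uses a common simplification, LTV = monthly revenue per customer × gross margin ÷ monthly churn, which assumes constant churn; all input figures below are hypothetical, and early-stage churn estimates are usually rough guesses.

```python
def cac(ad_spend: float, new_customers: int) -> float:
    """Customer acquisition cost: spend divided by customers acquired."""
    if new_customers <= 0:
        raise ValueError("need at least one acquired customer to compute CAC")
    return ad_spend / new_customers


def simple_ltv(monthly_revenue: float, gross_margin: float, monthly_churn: float) -> float:
    """Simplified lifetime value, assuming a constant monthly churn rate."""
    if not 0 < monthly_churn <= 1:
        raise ValueError("monthly_churn must be in (0, 1]")
    return monthly_revenue * gross_margin / monthly_churn


# Hypothetical test: $600 of ads produced 12 paying customers at $30/month
acquisition_cost = cac(ad_spend=600.0, new_customers=12)
lifetime_value = simple_ltv(monthly_revenue=30.0, gross_margin=0.8, monthly_churn=0.1)

print(acquisition_cost)                                # prints 50.0
print(round(lifetime_value / acquisition_cost, 2))     # LTV-to-CAC ratio
```

At MVP scale the ratio is directional at best; its main use is to flag channels where CAC clearly exceeds any plausible LTV.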

Mistake 10: The wrong partner

The issue: You outsource to the cheapest provider, who delivers code but not product thinking.

What to look for in a partner:

Evidence of a discovery process and a focus on quantifiable results.

Case studies that demonstrate iteration and improved metrics, not just feature delivery.

A regular communication cadence and shared ownership of KPIs.

If you would like an example of an outcome-focused partner, take a look at Pedals Up’s MVP and product strategy services.

Actual Case Study: Dropbox

Dropbox did something counterintuitive: instead of shipping a full sync engine first, the team published a short demo video that showed the product’s “magic.” The result was a rapid, measurable spike in sign-ups, with the beta waitlist reportedly growing from about 5,000 to 75,000 people overnight. That validated demand and justified investing in engineering at scale.

Why this matters:

- It converted product value into a measurable signal (waitlist growth).

- It reduced the time and money spent building unvalidated features.

Takeaway: if your product’s underlying “magic” is experiential, try an explainer video or interactive prototype before writing full production code.

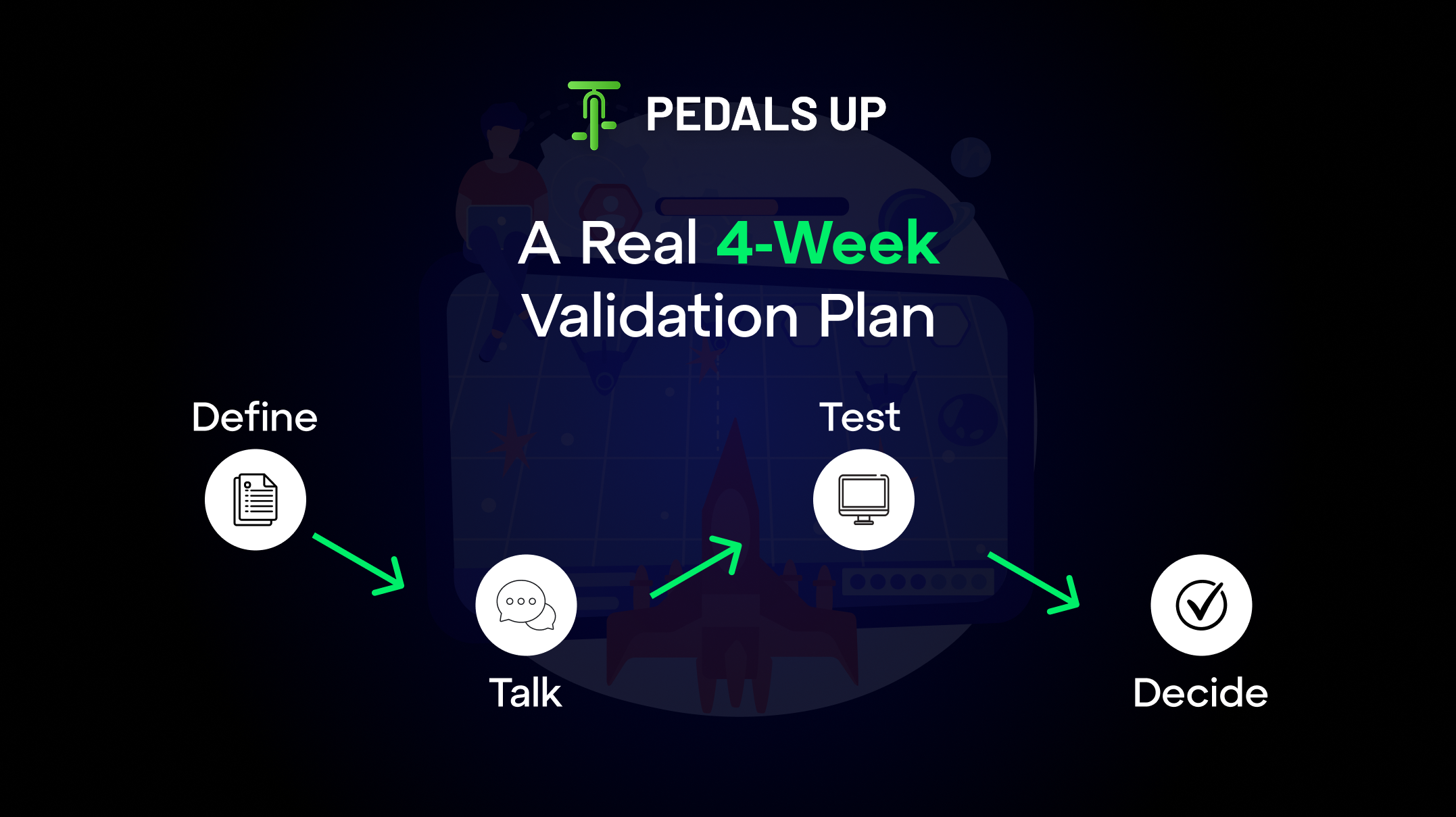

A Real 4-Week Validation Plan (Try This Now)

Week 1: Define

Create one testable hypothesis.

Chart out the user journey and the minimum flow needed to measure it.

Create a landing page with a clear call to action and simple tracking.

Week 2: Talk

Interview 12–15 target users using behavior-focused scripts.

Deploy an A/B test of the landing page, comparing two value propositions.

Week 3: Test

Run a small paid acquisition test to validate customer acquisition cost (CAC).

Perform guerrilla usability testing and prioritize the top three fixes.

Week 4: Decide

Review activation, retention, and willingness-to-pay signals against your hypothesis.

Pivot, persevere, or kill. If you’re going to continue, schedule a targeted engineering sprint with migration milestones.

Practical Checklist to Cut and Paste Into Your Roadmap

- One-sentence hypothesis (who, need, metric).

- Landing page with a call to action and tracking.

- 12–15 user interviews documented with direct quotes.

- Instrumented funnel (events and cohorts).

- Usability fixes shipped for the core flow.

- Two paid channel tests with CAC tracked.

- Migration notes and owners for re-platforming if validated.

Final Thoughts

An MVP is a measurement device. Your objective is to reduce uncertainty in the fastest, cheapest way possible. Avoid the ten common mistakes above, and you’ll be far better placed to reach a clear decision (pivot, persevere, or kill) within weeks, not months.

Pedals Up pairs product strategy, UX, and engineering to help founders run these experiments cleanly and avoid costly technical and validation mistakes.

Ready to test your idea quickly? Schedule a consultation with Pedals Up and receive a personalized 4-week validation plan that confirms demand without burning your runway.

FAQ

Q: How expensive is an MVP?

A: Discovery-only tests can cost less than $5k; the cost of a production-ready MVP varies widely depending on integrations and compliance requirements.

Q: Can I use no-code for an MVP?

A: Yes—validate your user experience and demand using no-code, then re-platform once you’ve confirmed product-market fit.

Q: What is the most critical metric?

A: The one that shows users are receiving the value you promised—usually activation or Week 1 retention.